This is an article for my future self, in case I need to grow a btrfs partition to its maximum size (full capacity) or shrink it while it is mounted.

TL;DR

Expanding a partition

Assuming your partition is /dev/sda2 mounted as /

# parted /dev/sda

(parted) resizepart 2 100% # 2 is for /dev/sda2 and 100% for full available capacity

Then tell btrfs that it should use all of the available space

# btrfs filesystem resize max /

Bonus points for checking the filesystem state afterwards.
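For scripting, the two expansion steps can also be run non-interactively. The following is a minimal sketch, assuming the same /dev/sda2 layout as above. The function names `run` and `expand_btrfs` and the `DRY_RUN` switch are made up for illustration; by default the commands are only printed so they can be reviewed first. parted may still ask questions in some situations even in script mode, in which case the interactive session above is the safer route.

```shell
#!/bin/sh
# Sketch: grow a partition to the end of the disk, then grow btrfs to match.
# DRY_RUN is a hypothetical switch; it defaults to 1 (print only, run nothing).

run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

expand_btrfs() {
    disk=$1        # whole disk, e.g. /dev/sda
    partnum=$2     # partition number, e.g. 2 for /dev/sda2
    mountpoint=$3  # where the btrfs filesystem is mounted, e.g. /
    run parted -s "$disk" resizepart "$partnum" 100% || return 1
    run partprobe "$disk" || return 1
    run btrfs filesystem resize max "$mountpoint"
}

# Usage (prints the three commands):  expand_btrfs /dev/sda 2 /
# Run for real, as root:              DRY_RUN=0 expand_btrfs /dev/sda 2 /
```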

Shrinking a partition (online)

Note: tmux is your friend!

Shrinking /dev/sda2 (mounted as /) by 5 GB (from 20 GB to 15 GB)

# btrfs filesystem resize -6G / # 6 and not 5 GB to have a safety margin (GiB vs GB issues etc.)

# parted /dev/sda

(parted) resizepart

Partition number? 2

End? [20.0GB]? 15GB # Caution: this is the END of the partition, NOT its size!

# partprobe

# btrfs filesystem resize max /

Also here: Bonus points for checking the filesystem state afterwards.
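Before shrinking the partition, it can help to check with plain arithmetic that the already-shrunk filesystem fits inside the new partition. This is a rough sketch, not a definitive check: the function name `fits_after_shrink` is hypothetical, the filesystem is assumed to start at the beginning of the partition, btrfs is assumed to read `6G` as GiB, and parted is assumed to place the end at exactly 10^9 bytes per GB (it may round).

```shell
#!/bin/sh
# fits_after_shrink FS_BYTES SHRINK_GIB START_BYTES END_GB
# Succeeds if a filesystem of FS_BYTES, shrunk by SHRINK_GIB (binary units),
# still fits into a partition running from START_BYTES to END_GB (decimal units).
fits_after_shrink() {
    fs_after=$(( $1 - $2 * 1024 * 1024 * 1024 ))
    part_after=$(( $4 * 1000 * 1000 * 1000 - $3 ))
    [ "$fs_after" -le "$part_after" ]
}

# Example with illustrative numbers: a filesystem of 19894000000 bytes on a
# partition starting at 106 MB, shrunk by 6 GiB, new partition end at 15 GB:
#   fits_after_shrink 19894000000 6 106000000 15 && echo "fits"
# On a real system, `blockdev --getsize64 /dev/sda2` reports the current
# partition size in bytes.
```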

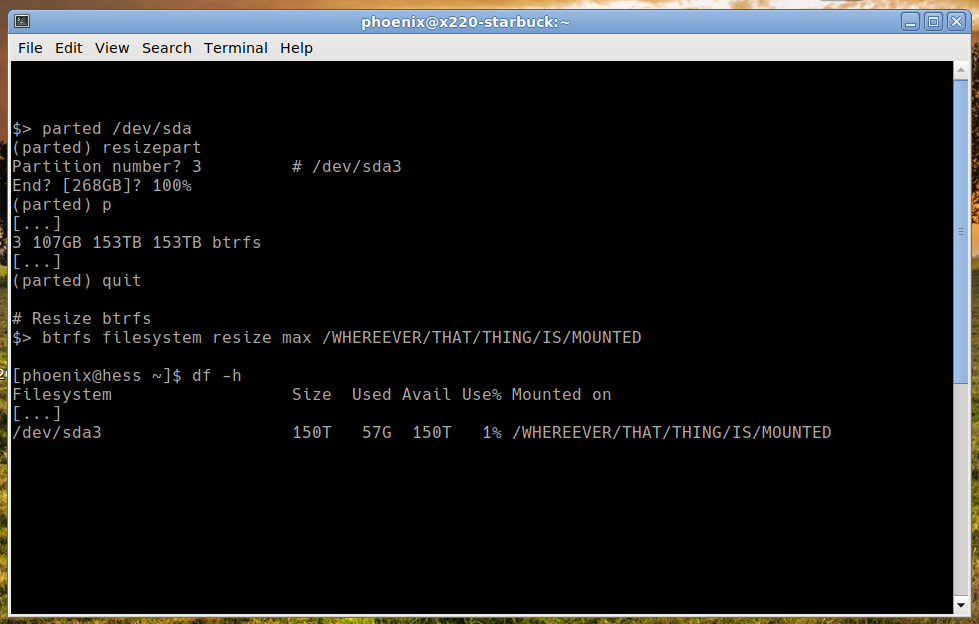

Walkthrough

One of the awesome features of btrfs is that it supports online resizing, i.e. it can be grown or shrunk while it is mounted. Awesome as that is, it always feels a bit like open-heart surgery to me.

The following example is valid for both shrinking and expanding a filesystem. Skip step 1 if you want to expand your filesystem.

1. When shrinking a partition, first shrink the btrfs filesystem. I’m assuming you want to shrink /dev/sda2 (mounted as /) by 5 GB, from 20 GB to 15 GB. To prevent possible issues with gibibyte vs gigabyte conversion etc., I highly recommend shrinking the filesystem by about 20% more than the amount you want to take away from the partition. The value of 20% comes from conversion factors plus a safety margin and is explained in detail in the section below. For 5 GB this means you shrink it by 6 GB at first:

# btrfs filesystem resize -6G /

2. Resize the partition using parted. Here we assume we want to resize /dev/sda2

# parted /dev/sda

(parted) resizepart

Partition number? 2 # /dev/sda2

End? [20GB]? 100% # 100% for max. available space or

End? [20GB]? 15GB # shrink to 15 GB

(parted) p # check that the resize was successful

[...]

2 106MB 20GB 20GB btrfs

(parted) q

3. Mount the filesystem (if not yet done) and tell btrfs to resize to max. available capacity

# mount /dev/sda2 /

# btrfs filesystem resize max /

4. Check if everything looks fine

# btrfs filesystem df /

Data, single: total=20.01GiB, used=4.24GiB

System, single: total=32.00MiB, used=48.00KiB

Metadata, single: total=1.01GiB, used=1.75GiB

GlobalReserve, single: total=293.48MiB, used=0.00B

# df -h

[...]

/dev/sda2 20G 4.24G 15.76G 21% /

/dev/sda2 20G 4.24G 15.76G 21% /srv

/dev/sda2 20G 4.24G 15.76G 21% /opt

/dev/sda2 20G 4.24G 15.76G 21% /tmp

[...]

If everything looks sane, you’re good to go :-)
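One more check that can be scripted is comparing the size btrfs reports for its device with the size of the partition itself. A small sketch, assuming that `btrfs filesystem usage -b` prints a `Device size:` line with a raw byte count; the helper name `device_size_bytes` is made up for this example.

```shell
#!/bin/sh
# device_size_bytes: read `btrfs filesystem usage -b <mountpoint>` output on
# stdin and print the byte count from the first "Device size:" line.
device_size_bytes() {
    awk '/Device size:/ { print $3; exit }'
}

# Usage (as root), then compare the two numbers by eye:
#   btrfs filesystem usage -b / | device_size_bytes
#   blockdev --getsize64 /dev/sda2
# After `resize max` they should be nearly equal; the filesystem may be
# slightly smaller than the partition because of alignment.
```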

Estimation of the safety margin for shrinking

When dealing with storage, there is often a lot of confusion about the 1000 vs 1024 unit conventions. To be on the safe side, I normally assume the worst case and add an additional safety margin on top of it.

Since storage is typically measured in GB and TB nowadays, you have a discrepancy of about 7% between GB and GiB and 10% between TB and TiB:

GiB/GB: (1024^3) / (10^3)^3 = 1.0737 or 7%

TiB/TB: (1024^4) / (10^3)^4 = 1.0995 or 10%

PiB/PB: (1024^5) / (10^3)^5 = 1.1259 or 12.6%

Now doubling this discrepancy results in my suggested 20% safety margin for TB scale storage systems. It’s a simple back-of-the-envelope estimation which I consider safe for sane filesystem use-cases.
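The ratios above and the 20% rule are easy to reproduce in the shell. This is just a sketch of the arithmetic; the function names `unit_ratio` and `shrink_with_margin` are invented for illustration.

```shell
#!/bin/sh
# unit_ratio N: ratio of 1024^N to 1000^N
# (N=3 for GiB/GB, N=4 for TiB/TB, N=5 for PiB/PB)
unit_ratio() {
    awk -v n="$1" 'BEGIN { printf "%.4f\n", (1024 ^ n) / (1000 ^ n) }'
}

# shrink_with_margin AMOUNT: AMOUNT plus 20%, rounded up to a whole unit
shrink_with_margin() {
    echo $(( ($1 * 12 + 9) / 10 ))
}

# unit_ratio 3           prints 1.0737
# unit_ratio 4           prints 1.0995
# shrink_with_margin 5   prints 6 (the "-6G" used in the example above)
```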

I’m also assuming that people who manage storage in the petabytes do not rely on or trust the blog posts of a random peasant on the internet :-)