Disclaimer: I really don’t want to criticize or blame anyone. I love btrfs and think it is one of the most amazing filesystems on this planet. This post documents a use case where I ran into some issues. All systems have their flaws.

I am setting up a new server for our telescope systems. The server acts as supervisor for a handful of virtual machines that control the telescope and instruments and provide some small services such as a file server. These are very basic services, nothing fancy, nothing exotic.

The server has 8 HDDs in total, configured as RAID6 and connected via a Symbios Logic MegaRAID SAS-3 controller. I would like to set up btrfs on the disks, but it turns out to be horribly slow. I chose btrfs for resilience reasons, especially the ability to create snapshots and conveniently transfer them to another storage system. I am using openSUSE Leap 15.0 because I wanted a kernel that is not too old and because openSUSE and btrfs should work nicely together.

The whole project is now running into problems because, compared to xfs or ext4, btrfs performs horribly in this environment (off by a factor of 10 and more!).

The problem

The results are also listed as text below; scroll to the end of this section.

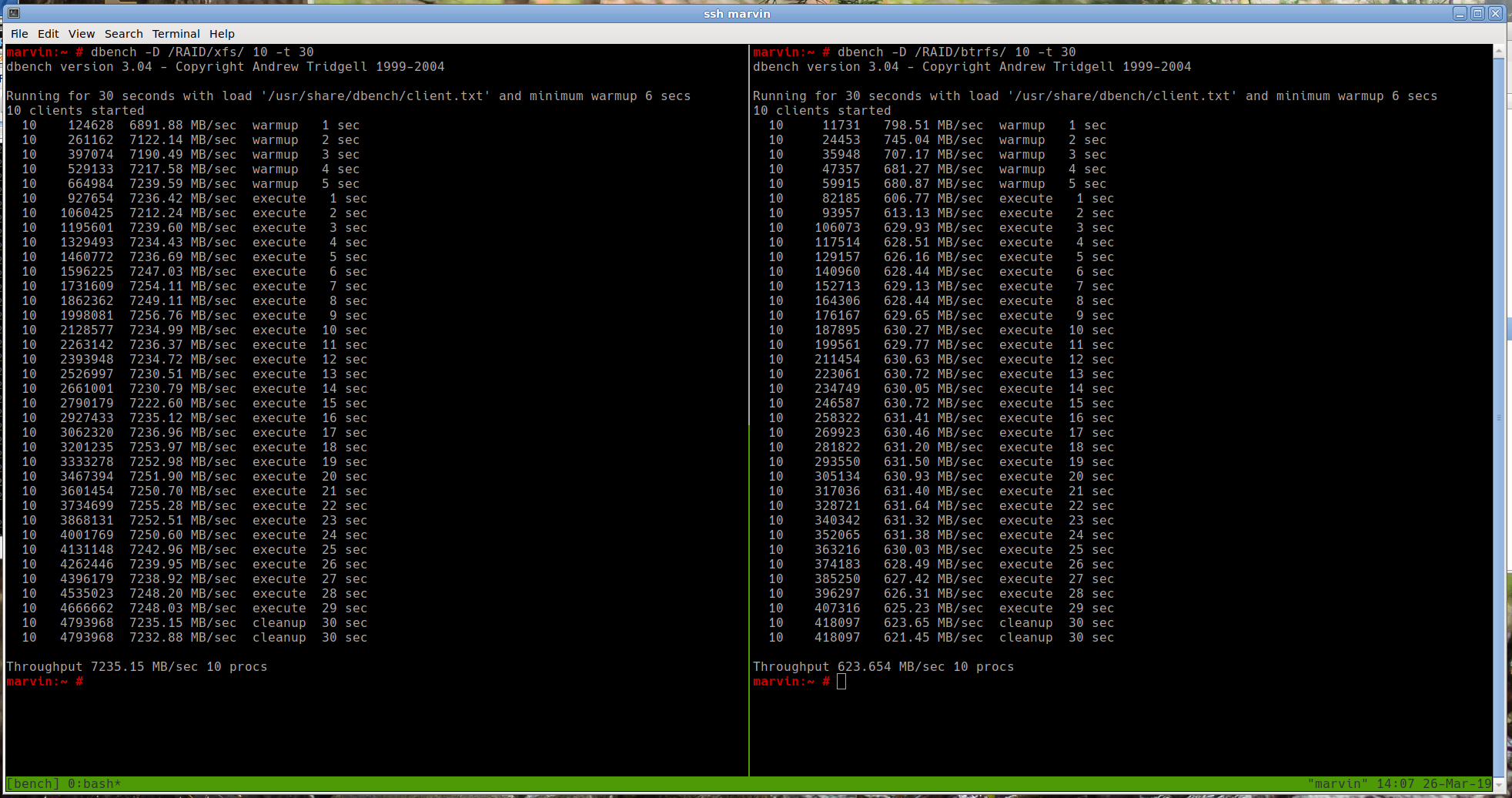

I use the tool dbench to measure IO throughput on the RAID. Comparing btrfs to ext4 or xfs, I notice that the overall throughput is about a factor of 10 (!!) lower, as can be seen in the following figure:

Throughput measurement using dbench: the throughput of xfs is 11.60 times as high as that of btrfs!

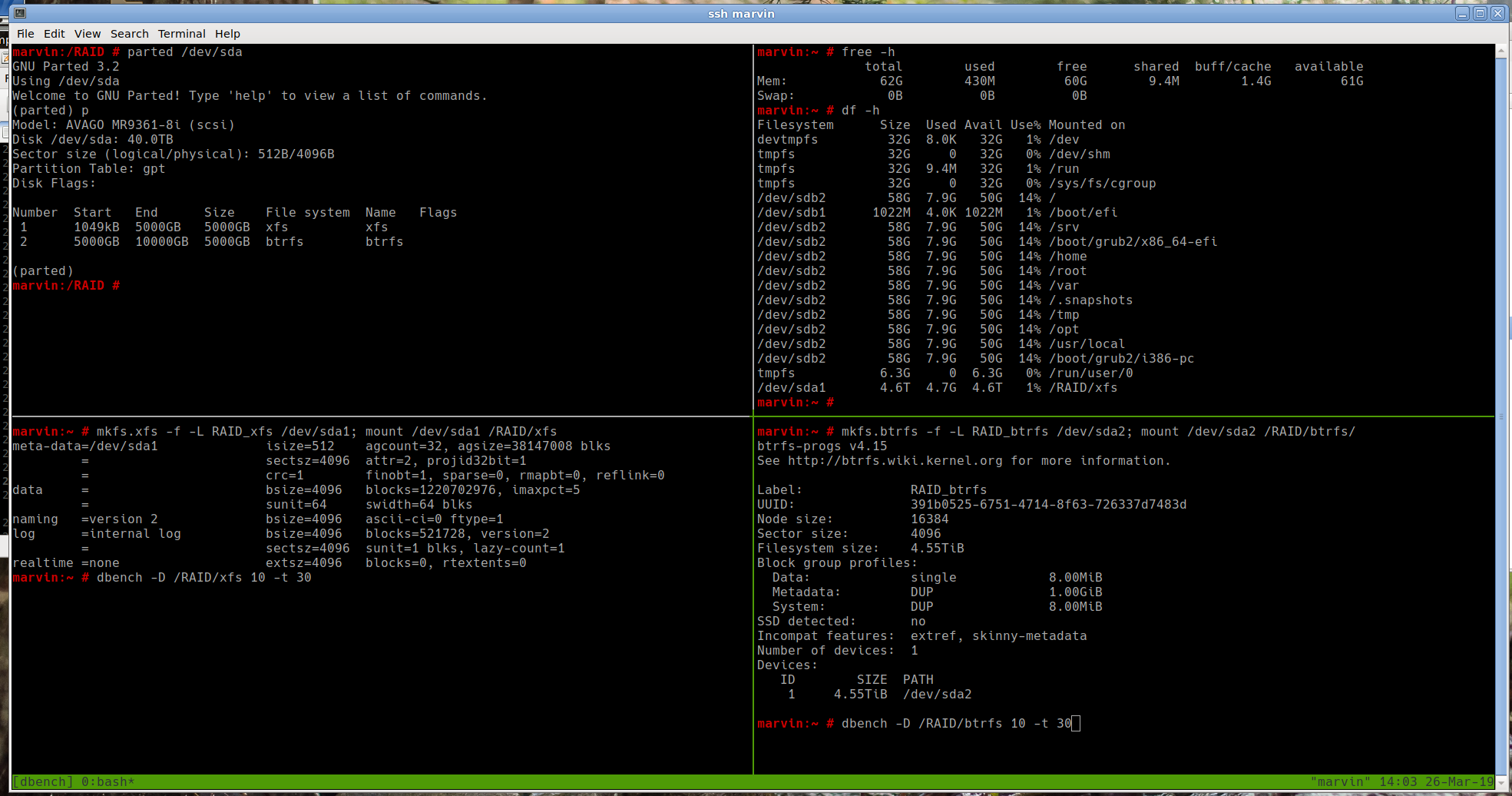
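For anyone who wants to repeat the measurement, here is a minimal sketch of how such a dbench run could be scripted and its summary line parsed. The mount point `/mnt/test` and the 60 second time limit are assumptions for illustration, not necessarily the settings used for the figures; only the 10 processes come from the results. The parser expects a summary line of the form shown in the results (`Throughput ... MB/sec`).

```python
import re
import shlex

def dbench_command(directory, procs=10, seconds=60):
    """Build the argv list for a dbench run.

    -D selects the directory to run in, -t the time limit in seconds;
    the trailing number is the number of client processes.
    """
    return ["dbench", "-D", directory, "-t", str(seconds), str(procs)]

# Matches the throughput figure in dbench's summary line.
_THROUGHPUT = re.compile(r"Throughput\s+([0-9.]+)\s+MB/sec")

def parse_throughput(output):
    """Return the throughput in MB/sec found in dbench output, or None."""
    match = _THROUGHPUT.search(output)
    return float(match.group(1)) if match else None

if __name__ == "__main__":
    # /mnt/test is a hypothetical mount point of the filesystem under test.
    print(shlex.join(dbench_command("/mnt/test")))
    print(parse_throughput("Throughput 623.654 MB/sec 10 procs"))
```

The command list can be handed to `subprocess.run(..., capture_output=True, text=True)` and the captured standard output passed to `parse_throughput`.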

sda1 and sda2 are obviously on the same disk and were created with default parameters, as the following figures demonstrate:

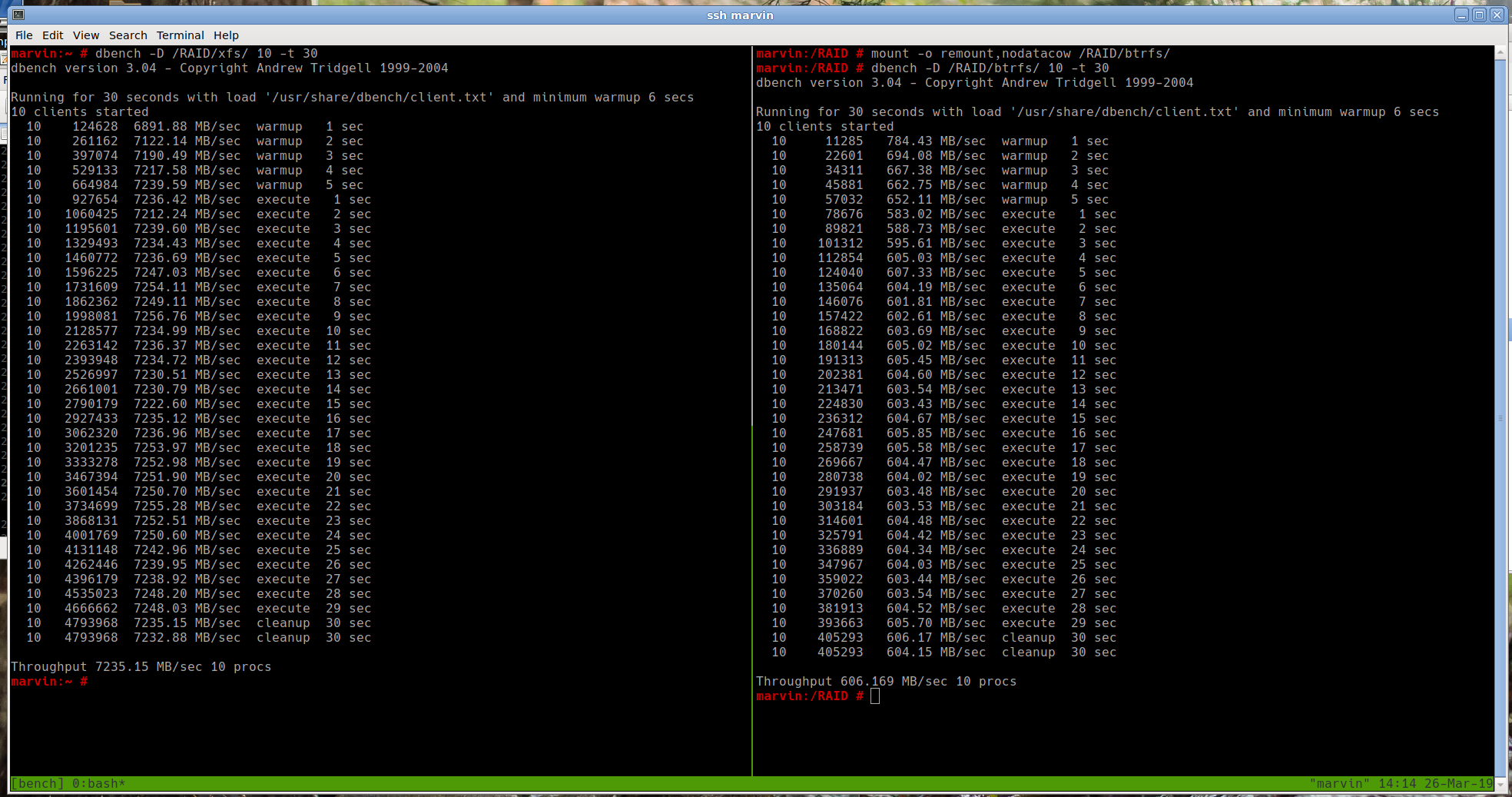

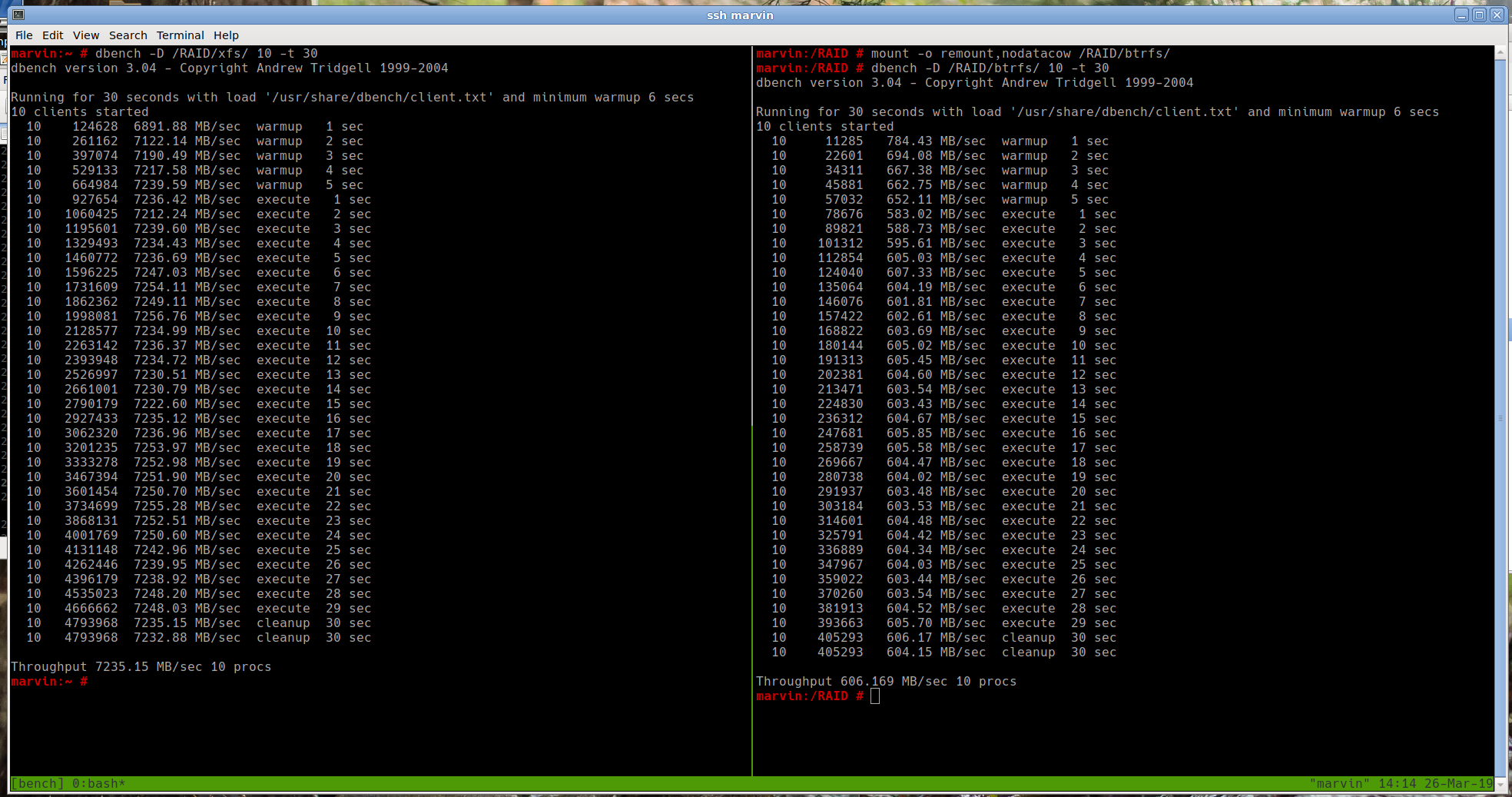

My first suspicion was that CoW (copy-on-write) could be the reason for the performance issue. So I tried to disable CoW, first by setting chattr +C and then by remounting the filesystem with nodatacow. Neither made any noticeable difference, as shown in the next two figures.

Same dbench run as in the beginning, but with nodatacow mount option. Still, negligible difference
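The two ways of disabling CoW mentioned above can be sketched as the following command lists. This is a dry-run helper that only builds the commands; the paths are hypothetical placeholders. One caveat, as far as I know from the chattr documentation: the `C` attribute on a directory only affects files created afterwards, so it should be set on an empty test directory before dbench runs.

```python
def nocow_commands(mount_point, test_dir):
    """Return the commands for the two CoW-disabling variants (not executed)."""
    return [
        # Variant 1: mark the (empty) test directory so new files are No_COW.
        ["chattr", "+C", test_dir],
        # Check that the attribute is set on the directory itself.
        ["lsattr", "-d", test_dir],
        # Variant 2: remount the whole filesystem without data CoW.
        ["mount", "-o", "remount,nodatacow", mount_point],
    ]

if __name__ == "__main__":
    # /mnt/btrfs and /mnt/btrfs/dbench are assumed paths for illustration.
    for argv in nocow_commands("/mnt/btrfs", "/mnt/btrfs/dbench"):
        print(" ".join(argv))
```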

Hmmmm, looks like something’s fishy with the filesystem. For completeness I also wanted to swap /dev/sda1 and /dev/sda2 (in case something is wrong with the partition alignment) and include ext4 for comparison. So I reformatted /dev/sda1 with btrfs (previously xfs) and /dev/sda2 with ext4 (previously btrfs). The results stayed the same, although there are some minor differences between ext4 and xfs (not discussed here). The order-of-magnitude difference between btrfs and ext4/xfs remained.

Swapping /dev/sda1 and /dev/sda2 and testing dbench on ext4 yields the same results: btrfs performs badly on this configuration

So, here are the results from the figures.

| Disk | Filesystem | Throughput (10 procs) |
|---|---|---|
| /dev/sda1 | xfs | 7235.15 MB/sec |
| /dev/sda2 | btrfs | 623.654 MB/sec |
| /dev/sda1 | btrfs (`chattr +C`) | 609.842 MB/sec |
| /dev/sda1 | btrfs (nodatacow) | 606.169 MB/sec |
| /dev/sda1 | btrfs | 636.441 MB/sec |
| /dev/sda2 | ext4 | 7130.93 MB/sec |
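To make the "factor of 10" concrete, here is a small sketch that recomputes the ratios from the numbers in the table. The values are copied from the results above; the variable and function names are just for illustration.

```python
# (filesystem label, throughput in MB/sec), taken from the table above
results = {
    "xfs": 7235.15,
    "btrfs (first run)": 623.654,
    "btrfs (chattr +C)": 609.842,
    "btrfs (nodatacow)": 606.169,
    "btrfs (after swap)": 636.441,
    "ext4": 7130.93,
}

def ratio(fast, slow):
    """How many times higher the throughput of `fast` is compared to `slow`."""
    return results[fast] / results[slow]

if __name__ == "__main__":
    # Initial comparison: xfs vs. btrfs
    print(f"xfs / btrfs:  {ratio('xfs', 'btrfs (first run)'):.2f}")
    # After swapping the partitions: ext4 vs. btrfs
    print(f"ext4 / btrfs: {ratio('ext4', 'btrfs (after swap)'):.2f}")
```

The first ratio reproduces the 11.60 quoted in the figure caption; the ext4 run after the swap lands at roughly the same factor.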

And here is also the text file of the detailed initial test series. In the beginning I said something like a factor of 10 and more. This relates to the results in the text file. When setting dbench to use synchronous IO operations, btrfs gets even worse.

Other benchmarks

Phoronix did a comparison between btrfs, ext4, f2fs and xfs on Linux 4.12, Linux 4.13 and Linux 4.14 that also revealed differences between the filesystems of varying magnitude.

As far as I can interpret their results, they remain rather inconclusive. Sequential reads are fastest on btrfs, while sequential writes are apparently rather disappointing (a factor of 4.3 difference). Those are synthetic benchmarks, though, and might not reflect how real-world workloads behave.